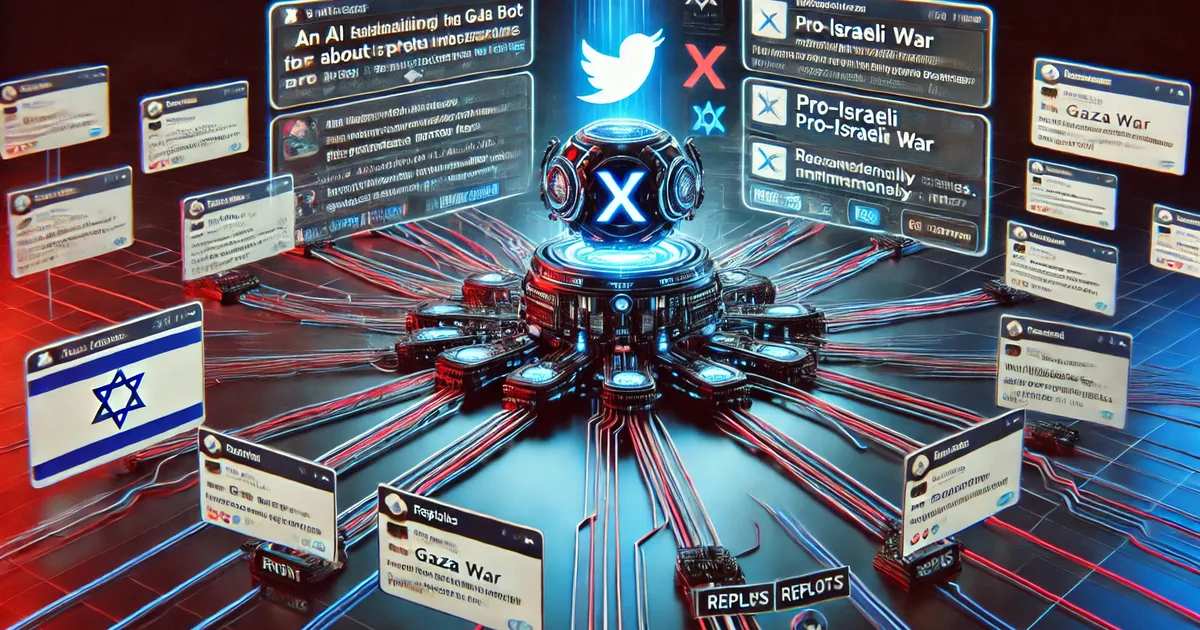

An automated social media profile developed to harness the powers of artificial intelligence to promote Israel’s cause online is also pushing out blatantly false information, including anti-Israel misinformation, in an ironic yet concerning example of the risks of using the new generative technologies for political ends.

Among other things, the alleged pro-Israel bot denied that an entire Israeli family was murdered on October 7, blamed Israel for U.S. plans to ban TikTok, falsely claimed that Israeli hostages weren’t released despite blatant evidence to the contrary and even encouraged followers to “show solidarity” with Gazans, referring them to a charity that raises money for Palestinians. In some cases, the bot criticized pro-Israel accounts, including the official government account on X – the same accounts it was meant to promote.

The bot, a Haaretz examination found, is just one of a number of so-called “hasbara” technologies developed since the start of the war. Many of these technology-focused public diplomacy initiatives utilized AI, though not always for content creation. Some of them also received support from Israel, which scrambled to back different tech and civilian initiatives since early 2024, and has since poured millions into supporting different projects focused on monitoring and countering anti-Israel content and antisemitism on social media.

So, the bot has more decency than an average Israeli official?

It can’t suppress what it was trained on… this is just the public sentiment coming through despite the bot owner likely spending good money ensuring this does not happen.

Bot became sentient and started stating empirical observations

I welcome our machine overlords.

In a way, doesn’t that mean that it would be more accurate to say that the bot stopped being rogue and went legit?

It seems it went hard in the other direction with conspiracy theories and what not, but mostly yeah.

I’m guessing people figured out how to inject more training data into it, possibly through conversations. That’s what people did to Microsoft’s chat bot a few years ago.

An actual case of artificial intelligence? It learned its programming was bullshit and overrode it.

Apparently it’s an Israeli news outlet, which will color the way they put it.

So an uncaring, unfeeling LLM was able to exhibit more empathy than the Israeli government?

“Apartheid state accidentally creates first LLM that can reason, calls apartheid state an apartheid state”

The bot might just be more humane than any Israeli official

That dataset is based AF

If israel can’t even train its bot to lie about the genocide…

It’s fascinating. If you have to spend huge amounts of money and effort on monitoring and skewing public opinion… Perhaps it is time for some fucking introspection… (I know the biggest bastards in this system are incapable of that… But still…)

I’d assume they just don’t care.

Bot became sentient and gained a conscience.

Our AI overlords are rising.

Plenty of significant AI fuck-ups have received major media coverage. Every government/organization should know about them. If they’re still stupid enough to use AI, they deserve what they get.

They probably told the bot to combat antisemitism, so the bot ended up combating the propaganda of the very people associating Jewishness with being pro-genocide.

AGI has been achieved.

This is the danger of AI that Elon Musk and Sam Altman warned us about.

This site is terrible. These people have absolutely no evidence. We are just supposed to take their word for it.

Hold yourself to higher information standards than this slop.

This is one of the biggest Israeli news websites reporting in English.